CAP Theorem

The CAP theorem states that any distributed data store can provide only two of the following three guarantees:

- Consistency: Every read receives the most recent write or an error.

- Availability: Every request receives a (non-error) response, without the guarantee that it contains the most recent write.

- Partition tolerance: The system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes.

When a network partition failure happens, it must be decided whether to cancel the operation and thus decrease the availability but ensure consistency, or to proceed with the operation and thus provide availability but risk inconsistency.

– Wikipedia CAP Theorem

Based on the CAP theorem, this post discusses how to maintain Consistency and Partition Tolerance between cache data and database data when something goes wrong.

Consistency

There are three types of consistency:

Strong Consistency: All accesses are seen by all parallel processes (or nodes, processors, etc.) in the same order (sequentially).

Meaning: what we read is always what someone most recently wrote.

Weak Consistency:

- All accesses to synchronization variables are seen by all processes (or nodes, processors) in the same order (sequentially); these are synchronization operations. Accesses to critical sections are seen sequentially.

- All other accesses may be seen in a different order on different processes (or nodes, processors).

- The set of both read and write operations in between different synchronization operations is the same in each process.

Meaning: there is no guarantee that you will read a value right after it is written, but the system tries its best to make the written data visible in the shortest time.

Final Consistency (more commonly called Eventual Consistency): a special type of Weak Consistency. If no new writes are made to an item, all reads of it will eventually return the last written value. THIS IS THE BEST WE CAN DO, AND THIS IS WHAT WE ARE LOOKING FOR.
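To make the difference between weak and final (eventual) consistency concrete, here is a toy sketch under our own assumptions: a hypothetical `ReplicatedStore` with one primary and one replica, where writes only reach the replica when a `sync()` step runs. A read from the replica can be stale (weak consistency), but once writes stop and `sync()` has run, the replica converges to the primary (eventual consistency).

```python
from collections import deque


class ReplicatedStore:
    """Toy primary/replica store with asynchronous replication (hypothetical)."""

    def __init__(self):
        self.primary = {}
        self.replica = {}
        self.pending = deque()  # replication log not yet applied to the replica

    def write(self, key, value):
        self.primary[key] = value          # the primary sees the write immediately
        self.pending.append((key, value))  # the replica will see it later

    def read_replica(self, key):
        # May return a stale value (or nothing) until sync() catches up.
        return self.replica.get(key)

    def sync(self):
        # Apply every pending write in order; once no new writes arrive,
        # the replica converges to the primary.
        while self.pending:
            key, value = self.pending.popleft()
            self.replica[key] = value


store = ReplicatedStore()
store.write("x", 1)
print(store.read_replica("x"))  # None: the replica has not caught up yet
store.sync()
print(store.read_replica("x"))  # 1: the replica has converged
```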

Final Consistency between Cache and DB - Cache Policy

To ensure Final Consistency between the cache and the DB, we can implement the following cache policies.

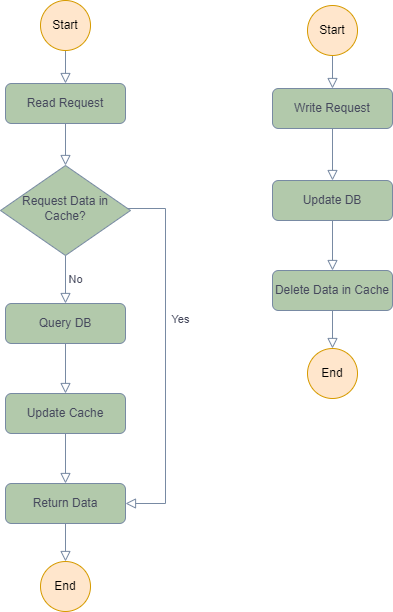

1. Cache-Aside

#### Why delete the data in the cache instead of updating it?

- Performance consideration: if the cached data is computed (for example, joined with other data sets), updating it on every write may trigger more of that expensive calculation. Simply deleting it is the cheapest option, and it also fits the Lazy Initialization policy: the value is only recomputed when someone next reads it.

- Race condition while updating data: suppose Thread 1 is supposed to update first and Thread 2 second. Thread 1 should complete both steps (update the database, then update the cache; see the picture above) as one atomic process before Thread 2 starts. But because of network delays, Thread 2 may start right after Thread 1 updates the database, and Thread 2 may finish updating both the database and the cache before Thread 1 updates the cache. The cache then holds Thread 1's data, not Thread 2's, while the database holds Thread 2's.
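The Cache-Aside read and write paths can be sketched as follows. This is a minimal illustration, not a production implementation: plain dicts stand in for the real cache and database, and the class name `CacheAside` is our own. Reads fill the cache lazily on a miss; writes update the database and then delete (rather than update) the cached entry.

```python
class CacheAside:
    """Minimal Cache-Aside sketch; dicts stand in for a real cache and DB."""

    def __init__(self, db):
        self.db = db      # hypothetical stand-in for the database
        self.cache = {}   # hypothetical stand-in for the cache

    def read(self, key):
        if key in self.cache:          # cache hit
            return self.cache[key]
        value = self.db.get(key)       # cache miss: load from the DB
        if value is not None:
            self.cache[key] = value    # populate lazily on read
        return value

    def write(self, key, value):
        self.db[key] = value           # 1. update the database
        self.cache.pop(key, None)      # 2. delete (not update) the cached entry


store = CacheAside({"user:1": "Alice"})
print(store.read("user:1"))  # Alice (loaded from the DB, now cached)
store.write("user:1", "Bob")
print(store.read("user:1"))  # Bob (the cached entry was deleted, so it reloads)
```

Deleting in `write` means the expensive recomputation, if any, happens only when a reader actually needs the value again.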

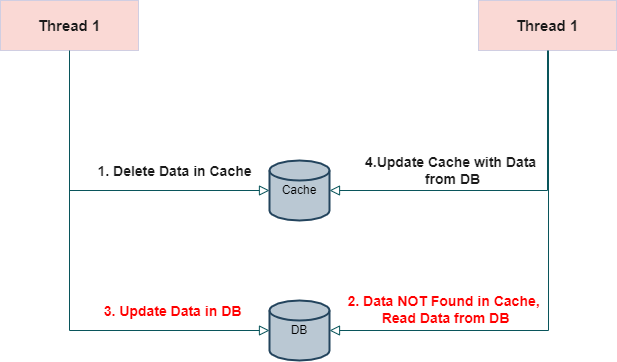

#### Why update the database first, instead of deleting the cached data first?

In the picture above:

- Thread 1 is a write request and starts first: it deletes the data in the cache.

- Thread 2 is a read request and comes next: it queries the cache, finds the data missing (deleted by Thread 1), so it goes to the DB and reads the data there.

- Thread 1 now writes the new data to the DB, so the DB holds the latest data.

- Thread 2, having read the data from the DB, now updates the cache with it, which is NOT the latest data Thread 1 just wrote.

- Now the DB and the cache are out of sync…
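The interleaving above can be replayed step by step. This sketch uses no real threads; it simply executes the four steps in the order described, with plain dicts standing in for the DB and cache, so the end state is deterministic.

```python
def replay_delete_cache_first():
    """Replays the 'delete cache, then update DB' interleaving (no real threads)."""
    db = {"k": "old"}
    cache = {"k": "old"}

    # Thread 1 (write): deletes the cached entry first.
    cache.pop("k", None)
    # Thread 2 (read): cache miss, so it reads the DB, which still holds "old".
    stale = db["k"]
    # Thread 1 (write): now writes the new value to the DB.
    db["k"] = "new"
    # Thread 2 (read): fills the cache with the value it read earlier.
    cache["k"] = stale
    return db, cache


db, cache = replay_delete_cache_first()
print(db["k"], cache["k"])  # new old: the DB and cache are out of sync
```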

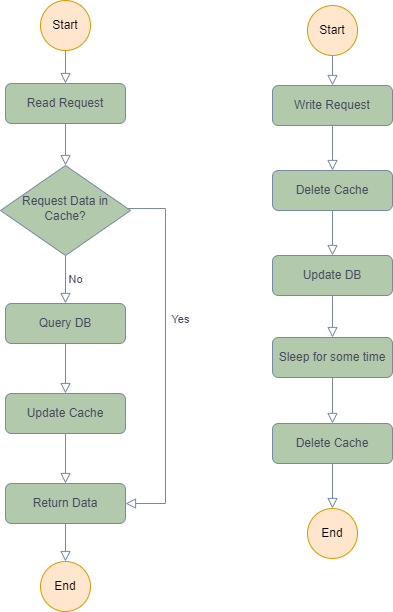

#### Fuck it! I just want to delete the cache first and then update the DB. Can I?

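Yes, you can, but you need the Delayed Double Delete strategy in the write request: delete the cache, update the DB, sleep for a while (usually a little longer than one read request takes), and then delete the cache again. The second delete evicts any stale value that a concurrent read request wrote back into the cache during that window.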
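A minimal sketch of Delayed Double Delete, assuming dicts for the cache and DB. The `delay_seconds` default is an arbitrary placeholder, and the injectable `sleep` parameter is a hypothetical hook we added only so the demo can deterministically simulate a slow read request landing during the delay; real code would simply sleep (or schedule the second delete as a delayed task).

```python
import time


class DoubleDeleteStore:
    """Delayed Double Delete sketch; dicts stand in for a real cache and DB."""

    def __init__(self, db, delay_seconds=0.5, sleep=time.sleep):
        self.db = db
        self.cache = {}
        # Assumed value: should be a little longer than one read request takes.
        self.delay_seconds = delay_seconds
        self.sleep = sleep  # hypothetical hook, injectable so the demo is deterministic

    def write(self, key, value):
        self.cache.pop(key, None)       # 1. delete the cached entry
        self.db[key] = value            # 2. update the DB
        self.sleep(self.delay_seconds)  # 3. wait out reads that may still hold the old value
        self.cache.pop(key, None)       # 4. delete again, evicting any stale value written meanwhile


def slow_reader_finishes(_seconds):
    # Hypothetical stand-in for the delay: a read request that loaded "old"
    # from the DB before step 2 writes it back to the cache right now.
    store.cache["k"] = "old"


store = DoubleDeleteStore({"k": "old"}, sleep=slow_reader_finishes)
store.write("k", "new")
print(store.cache.get("k"), store.db["k"])  # None new: the stale entry was evicted
```

The trade-off is that the write request is held up for the whole delay, and picking the delay is a guess about how long reads take.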

#### So… does the Cache-Aside Policy guarantee “Consistency” between DB and Cache?

No!!!!!

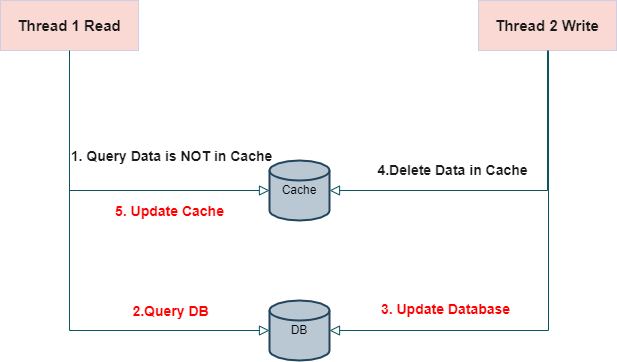

In the picture above:

- Thread 1 is a read request; it queries the cache, but the data is not in the cache.

- Thread 1 queries the database and gets the data.

- Thread 2 is a write request; it updates the database after Thread 1 has read the data from the database.

- Thread 2 deletes the data from the cache and completes its process.

- Thread 1 now updates the cache with the old data it read from the database.

In this situation, the data in the cache and the DB is out of sync.

But consider how likely this scenario is:

- The data not being in the cache at that moment is relatively uncommon.

- Reading from the DB is usually faster than writing to the DB, so it is very rare that Thread 1 queries the DB and then updates the cache only after Thread 2 has completed both updating the database and deleting the cache.
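The rare interleaving described above can be replayed step by step. Again this uses no real threads and plain dicts for the DB and cache; it only shows that "update DB, then delete cache" can still leave a stale entry when a slow read straddles the write.

```python
def replay_read_then_write_race():
    """Replays the slow-read vs. 'update DB, then delete cache' write race."""
    db = {"k": "old"}
    cache = {}  # the data is not in the cache

    # Thread 1 (read): cache miss, so it reads the DB and gets "old".
    stale = db["k"]
    # Thread 2 (write): updates the DB ...
    db["k"] = "new"
    # ... and deletes the cached entry (there is none yet).
    cache.pop("k", None)
    # Thread 1 (read): finally fills the cache with the value it read earlier.
    cache["k"] = stale
    return db, cache


db, cache = replay_read_then_write_race()
print(db["k"], cache["k"])  # new old
```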

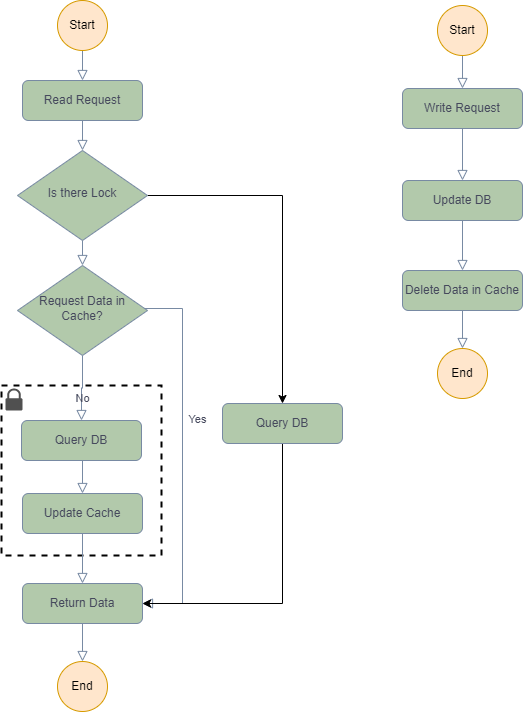

2. Better Version of Cache-Aside Policy

In the picture above, we add a lock around the read request's "query DB and update cache" steps. This makes querying the DB and updating the cache one atomic process, preventing dirty reads (stale data being written back into the cache).
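Here is a minimal sketch of the locked read path, assuming a per-key `threading.Lock` and dicts for the cache and DB. One assumption of ours that the text does not state: for the lock to rule out the slow-read race, the write request's cache delete must take the same lock, otherwise the writer could still delete between the reader's DB query and its cache update.

```python
import threading
from collections import defaultdict


class LockedCacheAside:
    """Cache-Aside with a per-key lock; plain dicts stand in for the cache and DB."""

    def __init__(self, db):
        self.db = db
        self.cache = {}
        self._locks = defaultdict(threading.Lock)  # one lock per key
        self._guard = threading.Lock()             # protects creation of per-key locks

    def _lock_for(self, key):
        with self._guard:
            return self._locks[key]

    def read(self, key):
        value = self.cache.get(key)
        if value is not None:                      # cache hit: no lock needed
            return value
        with self._lock_for(key):
            value = self.cache.get(key)            # another reader may have filled it
            if value is None:
                value = self.db.get(key)           # query the DB ...
                if value is not None:
                    self.cache[key] = value        # ... and fill the cache, atomically
            return value

    def write(self, key, value):
        self.db[key] = value                       # 1. update the DB
        with self._lock_for(key):                  # assumed: the writer respects the same lock
            self.cache.pop(key, None)              # 2. delete the cached entry


store = LockedCacheAside({"k": "old"})
print(store.read("k"))  # old
store.write("k", "new")
print(store.read("k"))  # new
```

If a reader holds the lock while it reads "old", the writer's delete waits until the reader has filled the cache, and then removes that stale entry.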

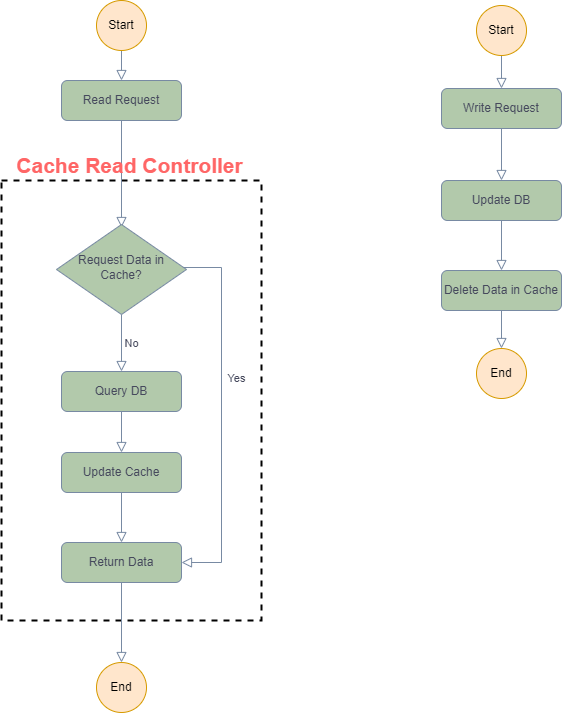

3. An Even Better Version of Cache-Aside Policy: Read-Through Policy

The Read-Through Policy is very similar to the Cache-Aside Policy. The difference is that the read request does NOT handle querying the DB and updating the cache; instead, another service provider queries the DB and updates the cache, and the read request just reads from the cache.

In this version, we create a Cache Read Controller. The read request only deals with that controller and has no direct access to the cache; the Cache Read Controller handles querying the DB and updating the cache, which eliminates the multi-thread issue among concurrent read requests.
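A sketch of such a Cache Read Controller, under our own assumptions: `CacheReadController`, `get`, `invalidate`, and the `loader` callback (a hypothetical function that queries the DB for one key) are illustrative names, not an existing library API. Callers only ever call `get()`; a per-key lock makes sure that concurrent misses on the same key trigger a single DB query.

```python
import threading
import time
from collections import defaultdict


class CacheReadController:
    """Read-Through sketch: read requests only call get(), never the cache or DB."""

    def __init__(self, loader):
        self._loader = loader                     # hypothetical: queries the DB for one key
        self._cache = {}
        self._locks = defaultdict(threading.Lock)
        self._guard = threading.Lock()
        self.db_queries = 0                       # counts loader calls, for illustration

    def _lock_for(self, key):
        with self._guard:
            return self._locks[key]

    def get(self, key):
        value = self._cache.get(key)
        if value is not None:
            return value
        with self._lock_for(key):
            value = self._cache.get(key)          # loaded by an earlier caller?
            if value is None:
                self.db_queries += 1
                value = self._loader(key)         # only the controller queries the DB
                if value is not None:
                    self._cache[key] = value
            return value

    def invalidate(self, key):
        with self._lock_for(key):                 # intended to be called from the write path
            self._cache.pop(key, None)


def slow_db_lookup(key):
    time.sleep(0.05)                              # pretend the DB query is slow
    return f"value-of-{key}"


controller = CacheReadController(slow_db_lookup)
results = []
readers = [threading.Thread(target=lambda: results.append(controller.get("k")))
           for _ in range(10)]
for t in readers:
    t.start()
for t in readers:
    t.join()
print(controller.db_queries, set(results))  # 1 {'value-of-k'}
```

Ten concurrent readers miss at once, but only the first one into the lock queries the DB; the rest find the value already cached.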

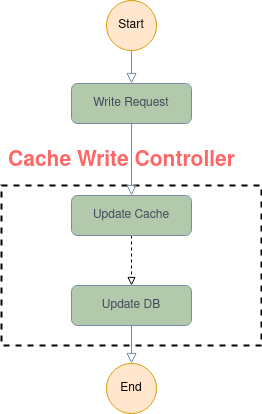

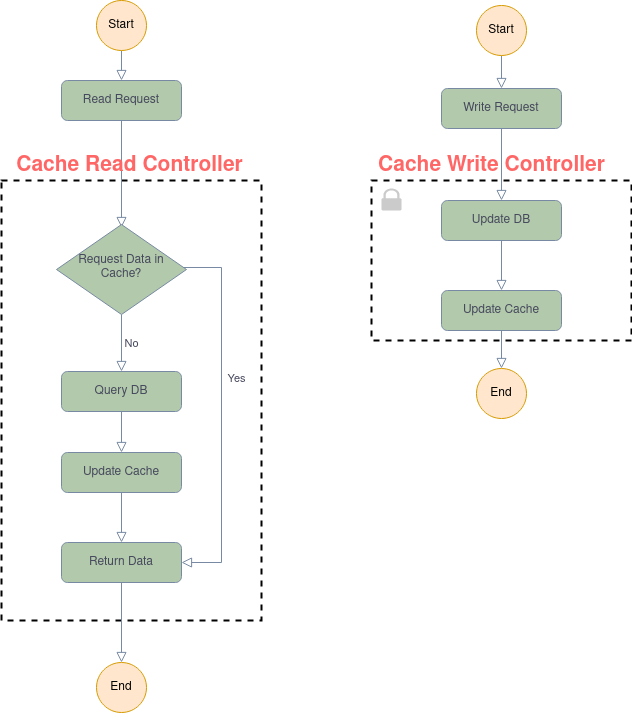

4. Write-Through Policy

In the Read-Through policy, we added a Cache Read Controller layer to the read request process. The Write-Through policy adds one more layer to the write request process, which gives better handling of writes.

Unlike the Cache-Aside policy, however, the Write-Through policy does NOT delete the cache; it updates it.

The Write-Through policy suggests wrapping its two steps, updating the database and updating the cache, in a lock. This prevents race conditions between multiple write requests that would otherwise cause inconsistency between the DB and the cache.

The Write-Through policy is usually implemented in environments with strict consistency requirements, but its high resource cost should be considered as well.
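A minimal Write-Through sketch, assuming dicts for the cache and DB and a single `threading.Lock` for simplicity (a real system might use finer-grained or distributed locks). Both steps run under the lock, so concurrent writers cannot interleave them, and whichever write happens last wins in both places.

```python
import threading


class WriteThroughStore:
    """Write-Through sketch; plain dicts stand in for the cache and DB."""

    def __init__(self, db):
        self.db = db
        self.cache = dict(db)            # assumption: the cache starts warm
        self._lock = threading.Lock()    # a single lock keeps the sketch simple

    def write(self, key, value):
        with self._lock:                 # both steps are atomic w.r.t. other writers
            self.db[key] = value         # 1. update the database
            self.cache[key] = value      # 2. update (not delete) the cache

    def read(self, key):
        return self.cache.get(key)


store = WriteThroughStore({"k": 0})
writers = [threading.Thread(target=store.write, args=("k", i)) for i in range(1, 9)]
for t in writers:
    t.start()
for t in writers:
    t.join()
print(store.db["k"] == store.cache["k"])  # True: the last write wins in both
```

Every write pays for the lock plus two updates, which is the resource cost mentioned above.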

5. Write-behind Policy

The Write-behind Policy only updates the cache, not the database; the database is updated later in an asynchronous process.
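A minimal Write-behind sketch, assuming dicts for the cache and DB and a single background thread that drains a `queue.Queue` of pending writes. The `flush()` method is a hypothetical helper we added so callers (and the demo) can wait until queued writes have reached the DB.

```python
import queue
import threading


class WriteBehindStore:
    """Write-behind sketch: writes hit the cache; a background thread updates the DB."""

    def __init__(self, db):
        self.db = db
        self.cache = {}
        self._pending = queue.Queue()
        threading.Thread(target=self._flush_loop, daemon=True).start()

    def write(self, key, value):
        self.cache[key] = value            # the caller only waits for the cache update
        self._pending.put((key, value))    # the DB update is deferred

    def read(self, key):
        return self.cache.get(key)

    def _flush_loop(self):
        while True:
            key, value = self._pending.get()
            self.db[key] = value           # asynchronous DB write, in the original order
            self._pending.task_done()

    def flush(self):
        """Block until every queued write has reached the DB."""
        self._pending.join()


store = WriteBehindStore({})
store.write("order:42", "paid")
print(store.read("order:42"))  # paid (served from the cache right away)
store.flush()
print(store.db["order:42"])    # paid (persisted by the background thread)
```

Writes are fast because they skip the DB, but until the queue drains the DB lags the cache, and any writes still queued are lost if the process crashes.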